ONNX – Format for AI models for interoperability between frameworks 🪴🛠️


🧠 What is ONNX?

ONNX (Open Neural Network Exchange) is an open format that lets development teams train a model in one framework and deploy it in another without rewriting it. The goal is to reduce friction between AI tools and shorten the path from research to production.

🌟 Advantages and special features

-

Framework independence : Training phase in, for example, PyTorch, deployment in TensorFlow or ONNX Runtime possible. Oracle Docs +2 onnx.ai +2

-

Performance optimization : ONNX Runtime offers optimizations for various hardware environments such as CPUs, GPUs, or specialized accelerators. vraspar.github.io +2 onnx.ai +2

-

Standardized graph structure : Models are stored as an acyclic computational graph with defined operators and data types. onnx.ai +2 onnx.ai +2

-

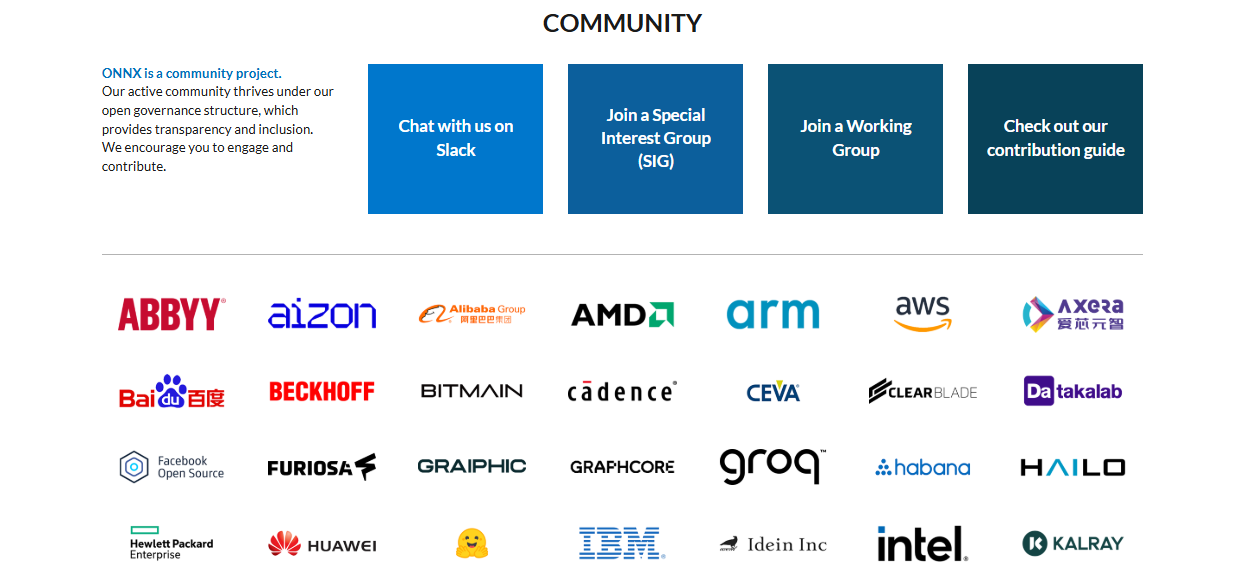

Large ecosystem : Many frameworks support exports to ONNX, and tools for visualization and optimization are available. GitHub +2 onnx.ai +2
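To make the "acyclic graph with defined operators" idea more concrete, here is a minimal conceptual sketch in plain Python. It does not use the ONNX library or its file format: the `Node` class, the `OPS` table, and `run_graph` are hypothetical names invented for this illustration, and real ONNX defines a far larger, versioned operator set that works on typed tensors rather than single floats.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter

# Hypothetical operator table for illustration only; these are scalar
# stand-ins, not the real ONNX operator definitions.
OPS = {
    "Add": lambda a, b: a + b,
    "Mul": lambda a, b: a * b,
    "Relu": lambda a: max(a, 0.0),
}


@dataclass(frozen=True)
class Node:
    op: str        # operator name, looked up in OPS
    inputs: tuple  # names of the values this node consumes
    output: str    # name of the value this node produces


def run_graph(nodes, feeds):
    """Evaluate nodes in dependency order.

    Raises graphlib.CycleError if the graph is not acyclic.
    """
    producers = {n.output: n for n in nodes}
    # For each produced value, record which other produced values it needs.
    deps = {n.output: {i for i in n.inputs if i in producers} for n in nodes}
    values = dict(feeds)
    for name in TopologicalSorter(deps).static_order():
        node = producers[name]
        values[name] = OPS[node.op](*(values[i] for i in node.inputs))
    return values


# y = Relu(x * w + b), with the nodes deliberately listed out of order:
# the graph structure, not the list order, determines evaluation order.
graph = [
    Node("Relu", ("xw_b",), "y"),
    Node("Add", ("xw", "b"), "xw_b"),
    Node("Mul", ("x", "w"), "xw"),
]
result = run_graph(graph, {"x": 2.0, "w": -3.0, "b": 1.0})
print(result["y"])  # 0.0, because 2.0 * -3.0 + 1.0 is negative
```

Because the model is described only by named operators and the values flowing between them, any runtime that implements the same operators could evaluate it, which is the property that allows a model to move between frameworks.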

🧭 Vision and Values

ONNX aims to give developers freedom of choice, foster innovation, and keep teams from being locked into a single framework. The goal is an AI ecosystem in which models and tools interoperate without artificial barriers.

🧰 Possible uses

- Exchange of models between different AI frameworks, e.g. training phase in one tool, deployment in another

- Use in production environments with low latency through ONNX Runtime

- Use in hardware-accelerated environments (e.g. GPUs, edge devices)

- Visualization, debugging and optimization of models

- Research and prototyping, e.g., development of new architectures, rapid iteration